AI Agent Cloud Game Testing

Designing an API-first system that builds trust in AI agents and test results.

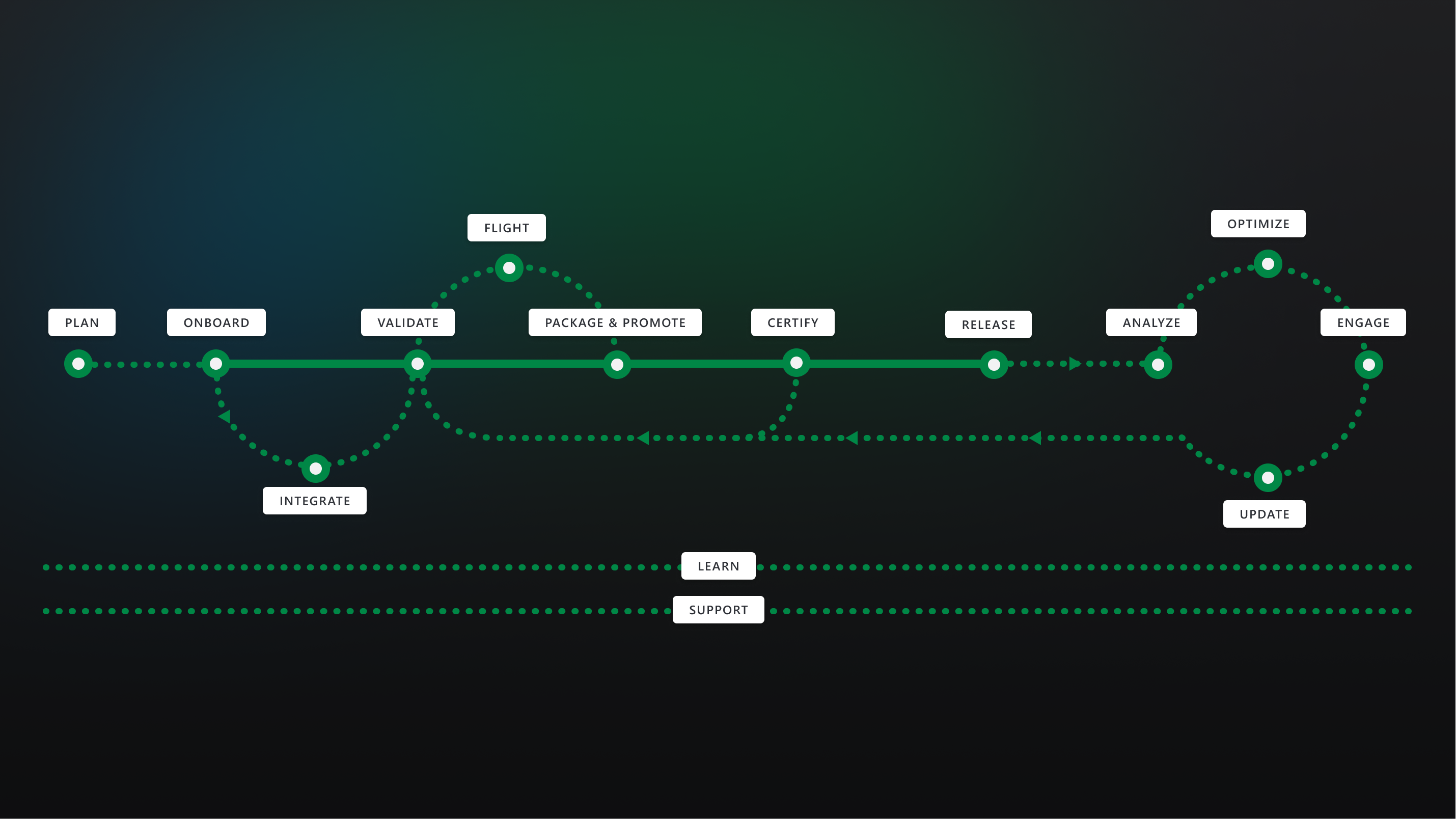

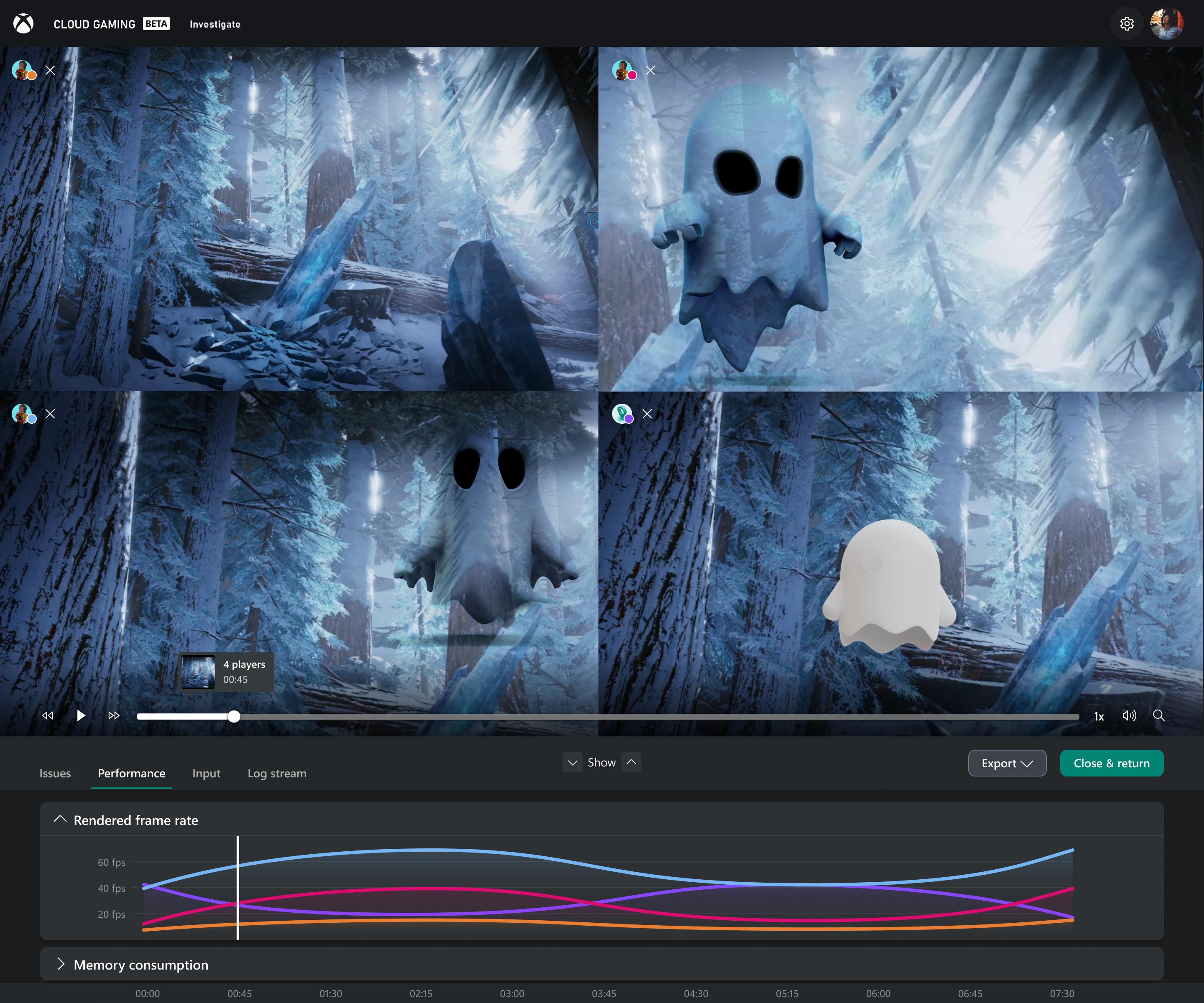

I led the design vision for Xbox's Cloud & AI Game Testing initiative, creating an API-first interface for cloud dev kits and defining the earliest AI-integrated testing workflows for game creators. My work unified previously fragmented systems and projects, enabled cross-team integrations, and established the foundation for automated, AI-driven game-testing agents.


Challenge

Friction slowing creative momentum

Game creators were slowed by inefficient manual testing and constant friction when configuring dev kits across platforms. Tools were fragmented and hard to navigate, which made critical cross-platform issues difficult to identify. The initiative aimed to use cloud capabilities to simplify dev-kit access, introduce AI test agents, and build trust in AI-generated test results, so creators could stay focused on game quality instead of infrastructure.

Divergence

Uncovering what QA truly needs

Through a series of interviews and hands-on workshops, it became clear that creators cared primarily about the art of the game, not the overhead around testing. They needed reliable cross-platform testing and reporting without gaps or blind spots, especially before they would consider adopting any AI-driven workflow. Reliability and transparency became non-negotiable.

Ideation

Designing the system before the UX

I began with an API-driven MVP to define the underlying testing system before introducing any UI. In Figma, I shaped early interface proposals and worked directly with engineers across multiple organizations to verify feasibility, then designed north-star explorations showing how AI agents could meaningfully augment future testing workflows.

Convergence

Three teams, one shared future

While I prototyped the cloud-dev-kit interface and early AI-assisted testing flows, mapping the full workflow revealed that the Cloud Dev Kit, AI Agent, and auto-certification teams were each unknowingly building pieces of the same future system. By visualizing the end-to-end experience, I brought all three groups together around one shared technical vision. Studio engineers and technical directors validated the concepts and helped refine them into a platform-agnostic workflow that could slot cleanly into existing pipelines.

Solution

Architecting an AI QA System

The core outputs included a cloud-dev-kit UI proposal, a cross-platform testing-workflow architecture, and a design vision for human-AI collaboration. Together, these formed the foundation for accurate, transparent, and reliable AI-assisted testing agents and the unified systems required to support them.

Outcome

Alignment that accelerated adoption

The work earned strong support from studio leaders and accelerated adoption across key internal teams, including Greenbelt (cloud dev kits), xShield (auto-certification), and AI4GT (AI test agents). It positioned me as a strategic partner in Xbox's emerging AI direction and helped shape the early thinking behind automated AI agents in creator workflows.

"When this solution launches, I would be running around screaming 'hallelujah!'" - First-party technical director

Takeaways

Bridging specialties and expertise

This project showed that deep technical work requires humility, persistence, and comfort with ambiguity. It strengthened my ability to bridge highly specialized teams and reinforced that AI succeeds only when rooted in real creator workflows, transparency, and trust.